Facebook examines moderation policies after pressure over Palestine content

2022-05-20 12:14

Meta headquarters in Menlo Park, California. AFP

By Layla Maghribi, The National

Facebook has said it is closely reviewing how it applies its content moderation policies, taking on board activist and user representations that there is bias in its removal decisions.

Richard Lappin, Meta’s head of content policy in Europe and the Middle East, told the Palestine Digital Activism Forum that the company had “extensive processes” and policies on content moderation which were “designed to be agnostic”, but that “it was important to hear views and feedback from all engaged communities”.

Mazuba Haanyama, Meta’s head of human rights, public policy, Africa and Middle East & Turkey, told listeners at the forum that an internal review had started but it was “a long process” and the company “hoped to share results soon”.

“In line with our human rights policy, we are conducting due diligence and want to ensure that we act upon them. There is no intention to put this on the shelf to gather dust,” said Ms Haanyama.

Mona Shtaya, advocacy adviser at The Arab Centre for the Advancement of Social Media, told The National that Meta had generally failed to give “clear answers to the questions raised”, including on how Facebook comes up with its dangerous organisations and individuals list.

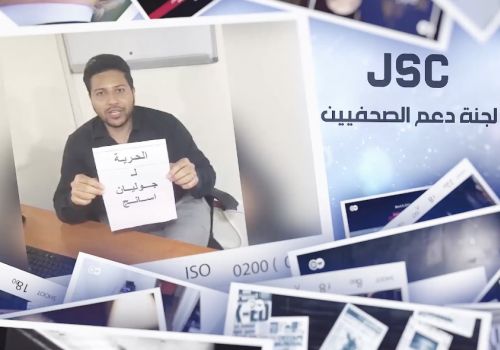

At stake is the social media giant's standing as a “truly global platform” as reported instances of “bias” in its content moderation, particularly towards Palestinians, accumulate rapidly.

Palestinians have been complaining for years that both Facebook and Instagram censor their experiences and narratives.

Last month, Dutch-Palestinian model Bella Hadid claimed she was “shadow banned” by Instagram for posting about Palestine, only hours after Israeli authorities attacked worshippers at Al Aqsa Mosque in Old Jerusalem.

“My Instagram has disabled me from posting on my story — pretty much only when it is Palestine based I’m going to assume,” Ms Hadid, who has 51 million followers, wrote on her Instagram story in April. “When I post about Palestine I get immediately shadow banned and almost 1 million less of you see my stories and posts.”

Palestinians are increasingly saying that their digital rights are being violated by social media platforms. Photo: Screengrabs from Bella Hadid's Instagram account

The high-profile model is far from being the only person to complain about this issue. During the Israeli-Palestinian conflict in Gaza last May, Palestinian activists said their posts on multiple social media platforms, including Facebook, Instagram and Twitter, were being deleted and their accounts suspended, often without explanation.

About 55 Palestinian names are banned entirely from being mentioned on Facebook, including in journalistic work, and only two Israeli names are on the same list.

The killing of veteran American-Palestinian journalist Shireen Abu Akleh during an Israeli raid in Jenin — an event that was captured on video and watched all over the world — exposed another aspect of Facebook’s “system of double standards”, says Ms Shtaya.

“When Israelis started sharing a video that wrongly stated that she was killed by Palestinians, we reported it to Facebook and asked them to mark it as disinformation. They refused and told us it didn’t violate their community standards.”

Critics say Facebook moderators view Arabic content with excessive suspicion and that it is flagged more readily by keywords that trigger automatic takedowns by the company's artificial intelligence programme, but that the same rigour is not applied to the Hebrew language.

Is Meta operating double standards on content moderation?

In September 2021, Meta’s oversight board, an independent body that monitors and reviews Facebook’s freedom of expression policy and actions, called for an independent review into alleged bias in the tech giant's moderation of Palestinian and Israeli posts.

It recommended that content reviewers should not be “associated with either side of the Israeli-Palestinian conflict” and that the company should examine both human and automated content moderation in Arabic and Hebrew and make its findings public.

Thomas Hughes from the oversight board said he was disappointed that Meta’s internal review report, which had been expected to be released this month, had been delayed.

“Sometimes the pace is not as quick as we would like … but there are changes being made,” said Mr Hughes. “Issues around bias on content moderation are very difficult and incredibly complex so we would rather it was done right than quickly.”